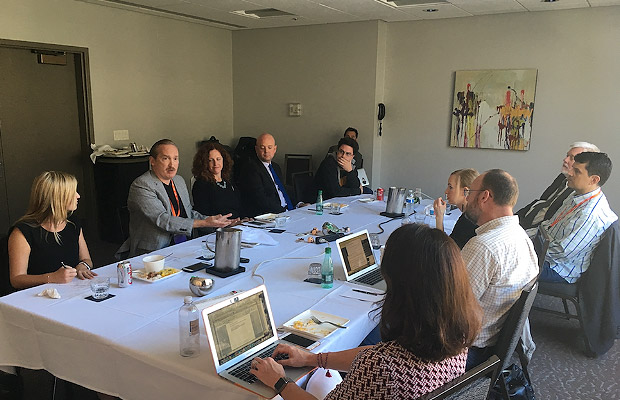

Recently, Riak held a roundtable discussion in San Francisco with Riak’s CEO, Adam Wray, and some of our technology partners, including Mac Devine, vice president and CTO of emerging technology at IBM, and Benjamin Wilson, product line technical director at Raytheon. National media and analysts also took part, including Rachel King of The Wall Street Journal; Annie Gaus, formerly of the San Francisco Business Times; Doug Dineley of InfoWorld; Alex Handy of SD Times; and Carl Olofson of IDC. The conversation focused on the technology challenges and the promising rewards of IoT initiatives, many of which are still in the testing or experimental stage and have not yet been fully deployed. Riak organized the roundtable to uncover how companies have taken IoT initiatives from concept to large-scale implementation.

IBM and Raytheon are especially qualified to speak about IoT deployments because both have significant IoT projects in production. Their leadership teams have intricate knowledge of the tools, such as distributed databases, and the strategy needed to derive value from IoT. Both organizations have shown they can deliver at scale, which suggests they have the processes and tools that enabled them to do so.

To help illustrate how innovative companies are making the most of IoT capabilities, we’ve recapped the discussion in a three-part series that highlights tools and strategies proven to move IoT initiatives from the early stage to production-ready, value-producing deployments.

Cognitive IoT at the Edge

Mac Devine, vice president and CTO of emerging technology at IBM, spoke about the idea of using cognitive learning to generate value at the network edge: “Often, when people talk about IoT, they focus on connectivity. They focus on tools and processes around messaging and queuing. The problem with this approach is that it does not home in on the real value of IoT: taking the data and generating cognitive insight in real time. At IBM we are working to capture data where it is and figure out how to analyze it in real time.”

“When we talk about capturing data where it is, we’re talking about decentralized architectures, where sensors and devices are not only spread out across disparate geographies but also often have enough compute power to process data locally. This focus on processing data at intelligent edges so that you can gain real-time business insight actually led to the acquisition of The Weather Company. A decentralized approach allows IBM to gather data and ingest that data wherever the data is, and then move cognitive algorithms toward the data.” This is edge computing.

The kind of architecture used by The Weather Company enables a scale that was unimaginable even three or four years ago. Today, organizations have to change their architectural models to include real-time data replication and distributed databases that together allow two things:

- A centralized aggregate view of all the data sources.

- Deployment of intelligence (data ingest and analysis, even cognitive algorithms) near the network edge, close to the sensors and devices, to enable real-time decision-making.
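To make these two requirements concrete, here is a minimal, hypothetical Python sketch. Each `EdgeNode` makes an immediate local decision on every reading (a simple threshold check standing in for real analytics) and ships only a compact summary to a `CentralView`, which combines the summaries into a network-wide aggregate. The class names, the threshold rule, and the summary fields are assumptions made for illustration; this is not how IBM, The Weather Company, or Riak actually implement it.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeNode:
    """Hypothetical edge location: ingests raw readings and decides locally."""
    name: str
    threshold: float  # illustrative alert threshold (an assumption)
    readings: list[float] = field(default_factory=list)
    alerts: list[float] = field(default_factory=list)

    def ingest(self, value: float) -> bool:
        """Store a reading and decide immediately, with no round trip to the core."""
        self.readings.append(value)
        if value > self.threshold:
            self.alerts.append(value)
            return True
        return False

    def summary(self) -> dict:
        """Compact summary sent upstream instead of the raw readings."""
        return {
            "node": self.name,
            "count": len(self.readings),
            "mean": mean(self.readings) if self.readings else 0.0,
            "alerts": len(self.alerts),
        }


class CentralView:
    """Hypothetical centralized aggregate view built from per-edge summaries."""

    def __init__(self) -> None:
        self.summaries: dict[str, dict] = {}

    def receive(self, summary: dict) -> None:
        # Keep the latest summary per edge node.
        self.summaries[summary["node"]] = summary

    def total_readings(self) -> int:
        return sum(s["count"] for s in self.summaries.values())

    def global_mean(self) -> float:
        total = self.total_readings()
        if total == 0:
            return 0.0
        # Weight each edge's mean by how many readings it saw.
        return sum(s["mean"] * s["count"] for s in self.summaries.values()) / total


if __name__ == "__main__":
    edge_a = EdgeNode("edge-a", threshold=25.0)
    edge_b = EdgeNode("edge-b", threshold=25.0)
    for v in [10.0, 20.0, 30.0]:
        edge_a.ingest(v)  # 30.0 triggers a local alert
    for v in [40.0, 20.0]:
        edge_b.ingest(v)  # 40.0 triggers a local alert
    central = CentralView()
    central.receive(edge_a.summary())
    central.receive(edge_b.summary())
    print(central.total_readings(), central.global_mean())  # prints 5 24.0
```

Keeping raw readings at the edge and sending only counts and means upstream is one way to keep the central view cheap to maintain; a production system would more likely replicate data continuously through a distributed database.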

Devine continues: “The great promise with IoT is that it offers spontaneous innovation at every edge location with a view across all the edges. With that view and an understanding of what’s happening across the network as a whole, organizations can then pass that insight back to the edge so the edge becomes more intelligent. This sort of back-and-forth interaction creates a cognitive loop between the backend systems and the edge, allowing greater real-time business insight.”
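The cognitive loop Devine describes can be sketched in a few lines of Python. In this hypothetical example, each `Edge` decides locally using a threshold it holds, reports only a sum and a count upstream, and the core pools those reports into a network-wide mean and pushes a new threshold back to every edge. The names and the "mean times margin" rule are arbitrary assumptions chosen for illustration, not IBM’s cognitive algorithms.

```python
from dataclasses import dataclass, field


@dataclass
class Edge:
    """Hypothetical edge location that applies a locally held decision rule."""
    name: str
    threshold: float
    readings: list[float] = field(default_factory=list)

    def observe(self, value: float) -> bool:
        # Real-time local decision using the current threshold.
        self.readings.append(value)
        return value > self.threshold

    def report(self) -> tuple[float, int]:
        # Only a sum and a count go upstream, not the raw readings.
        return sum(self.readings), len(self.readings)

    def update(self, threshold: float) -> None:
        # Insight pushed back from the core makes the edge "smarter".
        self.threshold = threshold


def cognitive_loop(edges: list[Edge], margin: float) -> float:
    """Run one pass of the edge -> core -> edge loop (illustrative only).

    The core pools every edge's report into a network-wide mean, derives a
    new threshold (mean * margin, an arbitrary rule for this sketch), and
    pushes it back to every edge.
    """
    total, count = 0.0, 0
    for edge in edges:
        s, n = edge.report()
        total += s
        count += n
    if count == 0:
        raise ValueError("no readings reported by any edge")
    new_threshold = (total / count) * margin
    for edge in edges:
        edge.update(new_threshold)
    return new_threshold
```

For example, two edges starting at a threshold of 100.0 that observe 10.0, 20.0 and 30.0 between them would, after one pass with `margin=1.5`, both adopt a threshold of 30.0, so a later reading of 35.0 is flagged locally without consulting the core.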

We can think of decentralized networks as east-west, point-to-point architectures. Each edge location that performs data ingest and analysis interacts with the other edge locations as well as with the centralized control point. This kind of topology helps organizations consume more of their own data and combine it with data from other service providers, letting them tap into the real-time insight that decentralized architectures make possible.

Adam Wray explained why distributed architectures help companies reach this scale. Many companies, such as those in the automotive sector, start by “using their own infrastructure and deploying collection stations. Then they try and bring that data back to the core and then do analysis to get some insight into what’s happening in vehicles. With IoT, the challenge is on. Now they are thinking, ‘How do I do that with 10 million cars, and how do I start to take all the data I can gather from tire pressure, braking and acceleration performance, etc., and turn it into actionable data in the field?’”

Benjamin Wilson, product line technical director at Raytheon, spoke about “how the new level of IoT edge capability to perform intelligently distributed scalable processing enables new use cases and valuable insights previously not possible in many markets.” Raytheon’s portfolio contains products that sense, consume and process a tremendous amount of real-time information locally at the tactical edge. As Wilson put it, “One key trend to help better address the evolving landscape is a new, deeper level of distributed capabilities across our networks, missions, markets and systems. To do that, we are working with this open team to enable meaningful operational capabilities for our end customers much more flexibly.”

Wilson continues: “There are many lessons that carry over between the defense and commercial industries. With IoT capabilities, we can dynamically and securely route specific processed data to where it needs to be processed, turn the data into consistent, normalized information across sources and things, and, using scaled reasoning, form cognitive wisdom that enables better distributed decisions. To build such a system, a balance of systems engineering, business prioritization, technical capabilities and governance must be sustained across all system stakeholders and components. With IoT and the edge, there is both great potential and added complexity, introduced by the extended range of stakeholders, challenges, components, vendors and technologies. If driven successfully, IoT capabilities can bring flexibility in technology and process when executing system architectures, yielding a higher ROI.”

To read more about Riak’s thoughts on IoT and edge analytics, see Adam Wray’s article “2017 Will be the launchpad for IoT analytics,” published in Sand Hill. To learn more about “Building Scalable IoT Apps Using OSS Technologies,” watch Pavel Hardak’s 2016 QCon San Francisco presentation in the Riak Video Playlist.

Look for Part 2 in this series, “Enabling Decisions Based on Factual Data.”